Using resources efficiently

Statistics on your resource usage are saved in the directory /home/<user name>/hpc_statistics. They are meant to help you specify the resources a job needs more accurately. This has the following benefits:

- A job that requests no more resources than it needs will, in general, start sooner.

- Because previous resource usage is taken into account when calculating the priority of future jobs, estimating resource requirements accurately has a positive effect on that priority.

- If fewer resources are consumed because requirements are estimated more accurately, more jobs can run simultaneously and the total throughput of the system increases.

Job Efficiency

To prevent your jobs from waiting for resources they will not actually need, it is important to estimate their requirements as accurately as possible. This not only benefits you directly, but also increases the total throughput of the system, and thus allows more jobs from all users to run in a given period.

The command to obtain efficiency information about a job is seff. For example, to obtain the information about job 123456, run:

seff 123456

For a job array, seff-array can be used:

seff-array 123456

Furthermore, the current efficiency of all your running jobs can be displayed with sujeff:

sujeff username

where username must be replaced by your own FU account name.

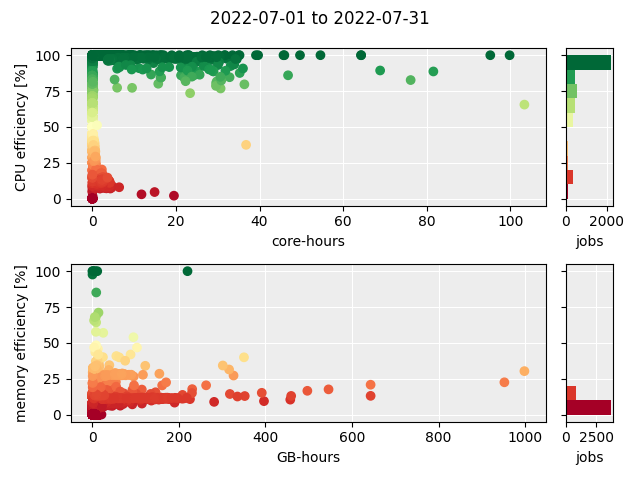

The job efficiency graphic, which comprises two scatterplots and two histograms, is generated from the output of seff for the jobs that completed in the previous month. It shows the resources actually used as a percentage of the resources requested, both for the number of CPU cores and for the amount of main memory (RAM), in each case multiplied by the run-time of the job. In general, further files with data for previous months will also be present.
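The percentages can be reproduced by hand. The following is a minimal sketch, assuming the CPU time, wall-clock time and memory figures have already been read from the CPU Utilized, Job Wall-clock time and Memory Utilized lines of seff and converted to seconds and megabytes. CPU efficiency is the CPU time actually used divided by the reserved core-time (number of cores multiplied by the run-time); memory efficiency is the peak memory used divided by the memory requested. The functions cpu_efficiency and mem_efficiency are hypothetical helpers, not commands installed on the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not installed on the cluster) that reproduce the
# efficiency percentages reported by seff.

# CPU efficiency in percent:
#   CPU time used / (allocated cores * wall-clock run-time)
# Arguments: cpu_seconds cores wall_seconds
cpu_efficiency() {
    awk -v used="$1" -v cores="$2" -v wall="$3" \
        'BEGIN { printf "%.1f\n", 100 * used / (cores * wall) }'
}

# Memory efficiency in percent:
#   peak memory used / memory requested (both in the same unit, here MB)
# Arguments: used_mb requested_mb
mem_efficiency() {
    awk -v used="$1" -v req="$2" \
        'BEGIN { printf "%.1f\n", 100 * used / req }'
}

# A job that used 1 h of CPU time on 4 cores during a 30 min run
# kept its cores busy only half of the time:
cpu_efficiency 3600 4 1800   # prints 50.0
# A job that peaked at 2000 MB of the 8000 MB it requested:
mem_efficiency 2000 8000     # prints 25.0
```

In both examples, the request could have been considerably smaller without affecting the job.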

If too many of your jobs show low CPU efficiency, the following points should be considered:

- Are all the cores assigned to the job actually available to it? For a job that cannot run on multiple nodes, the option --nodes=1 must be specified. This ensures that all cores assigned to the job belong to a single node.

- How well does the program scale? In other words, can the program really make full use of the number of CPU cores requested? We recommend running scaling tests in which the same problem is calculated several times, with a different number of cores requested each time.
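Such a scaling test can be scripted. The following is a minimal sketch, assuming a multithreaded (shared-memory) program started by a hypothetical batch script job.sh. Each submission uses --nodes=1 so that all cores are on one node, and varies --cpus-per-task (for an MPI program you would vary --ntasks instead). Setting SBATCH=echo prints the sbatch commands instead of submitting them. The second helper turns the measured run-times into a parallel efficiency T(1) / (n * T(n)); values well below 100 % mean that the extra cores are largely idle. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of a scaling test for a hypothetical batch script (e.g. job.sh).
# Set SBATCH=echo to print the commands instead of submitting them.
SBATCH="${SBATCH:-sbatch}"

# Submit the same script once per core count given as arguments.
# Arguments: script n1 [n2 ...]
submit_scaling_tests() {
    local script="$1"; shift
    local n
    for n in "$@"; do
        # --nodes=1 keeps all cores of the job on a single node;
        # a multithreaded program can read $SLURM_CPUS_PER_TASK in job.sh
        "$SBATCH" --nodes=1 --ntasks=1 --cpus-per-task="$n" \
            --job-name="scaling_$n" "$script"
    done
}

# Parallel efficiency in percent from measured run-times:
#   T(1) / (n * T(n))
# Arguments: t1_seconds n tn_seconds
parallel_efficiency() {
    awk -v t1="$1" -v n="$2" -v tn="$3" \
        'BEGIN { printf "%.1f\n", 100 * t1 / (n * tn) }'
}
```

For example, submit_scaling_tests job.sh 1 2 4 8 16 submits five jobs. If the run with 1 core took 1000 s and the run with 4 cores took 500 s, parallel_efficiency 1000 4 500 prints 50.0, so half of the 4 cores would be wasted.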

If the main memory efficiency is low, check whether less memory could be requested. The following points should be considered:

- The maximum amount of memory needed at any point during the whole run must be requested. Because exceeding this limit causes the job to be terminated, the value requested should include a certain buffer.

- If possible, phases of the program that require different amounts of memory should be run as separate jobs. In this way, the amount of memory appropriate for each phase can be requested.
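Adding the buffer is simple arithmetic. The following is a minimal sketch, assuming the peak memory has been taken from the Memory Utilized line of seff (or the MaxRSS field of sacct) and converted to megabytes. The 20 % margin in the example is purely an illustrative assumption, not a recommendation for this cluster, and mem_request_mb is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: memory to request (in MB) given the measured peak
# usage and a safety margin in percent, rounded up to a whole megabyte.
# Arguments: peak_mb buffer_percent
mem_request_mb() {
    local peak="$1" buffer="$2"
    # integer ceiling of peak * (100 + buffer) / 100
    echo $(( (peak * (100 + buffer) + 99) / 100 ))
}

# A job that peaked at 3000 MB, with an assumed margin of 20 %:
mem="$(mem_request_mb 3000 20)"
echo "$mem"    # prints 3600
# The value could then be passed to Slurm, e.g.:
#   sbatch --mem="${mem}M" job.sh
```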

Efficiency information is also displayed by the command jobstats, e.g.

jobstats 123456

In particular, jobstats also provides information about the usage of GPU cards, as well as suggestions for improving efficiency.

File System Usage

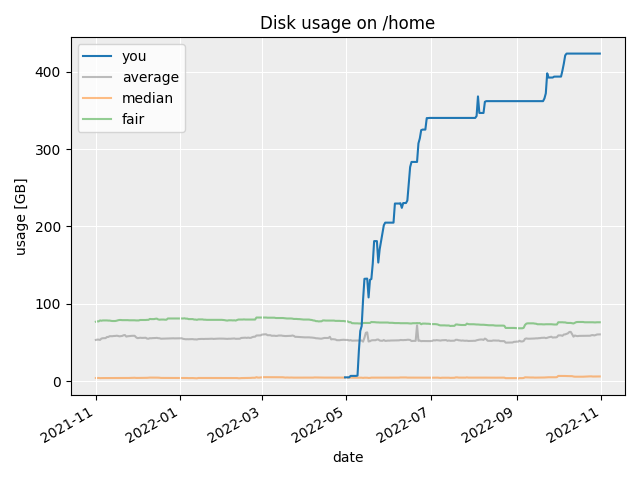

For each file system, a graphic is generated which shows your usage compared with the average usage, the median usage, and the fair usage, i.e. the amount of space each user would have if the total space on the file system were divided by the number of users.
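The fair usage is a single division, and your own usage can be checked with du. The following is a minimal sketch: fair_usage_gb and my_usage_gb are hypothetical helpers, and the file-system size and number of users in the example are made-up figures, since the real values must be supplied by hand.

```shell
#!/usr/bin/env bash
# Fair usage: total space of a file system divided by the number of users.
# Hypothetical helper; both figures must be supplied by hand.
# Arguments: total_gb n_users
fair_usage_gb() {
    awk -v total="$1" -v users="$2" 'BEGIN { printf "%.1f\n", total / users }'
}

# Your own usage of a directory in GB, summed with du
# (-s: print only the total, -k: report in KiB).
# du may take a while on directories containing many files.
# Arguments: directory
my_usage_gb() {
    du -sk "$1" | awk '{ printf "%.1f\n", $1 / (1024 * 1024) }'
}

# Made-up example: a 100000 GB file system shared by 400 users
fair_usage_gb 100000 400    # prints 250.0
# my_usage_gb "$HOME"
```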

If your usage becomes significantly larger than the fair usage, please take steps to reduce the amount of data you have.

Ways of achieving this include:

- deleting data you no longer need

- moving data from /home to /scratch (assuming your usage on /scratch is not already excessive)

- moving data off the HPC system

- packing data into compressed archives (e.g. with the command tar)
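For the last point, the following is a minimal sketch that packs a directory into a gzip-compressed tar archive and checks that the archive can be read before the original is removed. The function pack_dir and the directory name old_results are hypothetical. Deleting the original is deliberately left to you, so the function does not do it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pack directory DIR into the gzip-compressed archive
# ARCHIVE and verify that the archive can be listed afterwards.
# Arguments: dir archive
pack_dir() {
    local dir="${1%/}" archive="$2"
    # -C changes to the parent directory so the archive stores relative paths
    tar -czf "$archive" -C "$(dirname "$dir")" "$(basename "$dir")" || return 1
    # reading the full table of contents checks that the archive is intact
    tar -tzf "$archive" > /dev/null || return 1
    echo "packed $dir into $archive"
}

# Usage, e.g.:
#   pack_dir old_results old_results.tar.gz
#   rm -r old_results    # only after checking the archive
```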

If you have questions about the information on this page, please contact the HPC support.