Lessons about the Future from RoboFish and Baybie

Robots that learn, cars that drive themselves, and machines that prove the existence of God – a glimpse into the daily life of researchers at the Dahlem Center for Machine Learning and Robotics.

Aug 16, 2019

RoboFish is not actually a fish. Although it looks like a guppy, its eyes are just glued onto its plastic body. It swims with real guppies in a square glass tank. Underneath the tank is a two-wheeled robot that is connected magnetically to the phony fish, and the robot itself is controlled by an external computer. RoboFish is one of the projects investigating animal intelligence that Tim Landgraf is conducting at the Dahlem Center for Machine Learning and Robotics (DCMLR).

Junior professor Tim Landgraf examines fishes’ social behavior using RoboFish, an artificial guppy.

Image Credit: Anne-Sophie Schmidt

The DCMLR has been a place for research on artificial intelligence and robotics for thirty years. With the help of his undercover robot, the computer science junior professor hopes to learn more about the social behavior of guppies. Like humans, fish display individuality in their behavior: some are brave, some are shy, others are more social. RoboFish adapts its behavior to match that of its tank mates, for example by slowly swimming up to a skittish fish.

Testing mathematical models directly

Measurements have shown that fish develop a social bond with RoboFish more quickly if it responds to their individual behavior; they then follow it up to three times as long. Until now, there have been two main ways to try to understand social systems better, Tim Landgraf explains. “Biologists describe behaviors, mathematicians create models. With the help of robots we can test mathematical models directly in the system. It’s the best of both worlds,” he says.

Landgraf has another project in which he works with bees: an entire honeycomb, to be precise, with 1,500 bees living in it. Cameras record the honeycomb from both sides, and because they work with infrared light, the bees do not notice them. Every single bee is registered and has a name, such as Cambee, Baybie, or Ybie. In an earlier project, Landgraf studied the dance of the honeybee using RoboBee, another undercover robot. The dance gives the other bees directions to feeding sites. Currently, he is studying how bees orient themselves in their surroundings.

Tim Landgraf, junior professor (right), and Mathis Hocke, student assistant in the project.

Image Credit: Anne-Sophie Schmidt

How do individuals live together intelligently in a biological system?

Sending in undercover agents to manipulate a group’s behavior, observing an entire population and tracking all the interactions within it, or implanting electrodes in the brain: these are all experiments that cannot be done with human test subjects for ethical reasons. Research conducted with fish or bee colonies is thus a great way to learn how individuals live together intelligently in a biological system, scientists say.

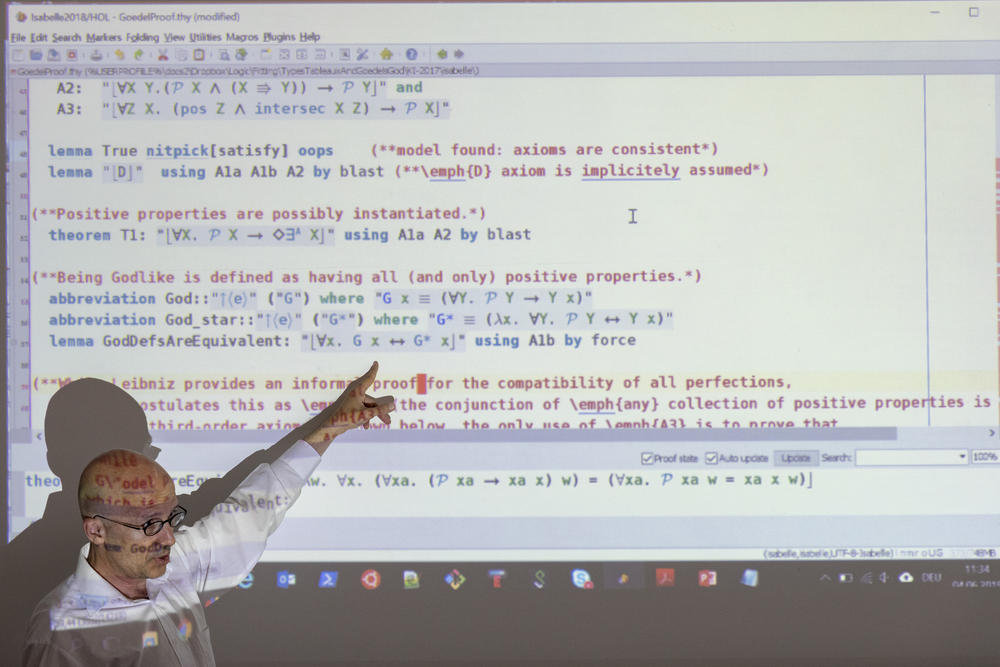

Christoph Benzmüller also uses machines in his research. In 2013, he used a computer program to verify the mathematical proof of God’s existence developed by Kurt Gödel. Some variants of Gödel’s proof, which is based on modal logic, proved logically valid; others, however, contained errors. With Benzmüller’s computer program, it would also be possible to test the coherence of other kinds of texts, for example formal legal documents or ethical regulations.

In 2013, Christoph Benzmüller checked and verified mathematician Kurt Gödel’s proof of God’s existence.

Image Credit: Anne-Sophie Schmidt

One practical application of this technology is self-driving cars. The computers that drive the car must be fed information so that they can make decisions in any situation, including dangerous ones, such as when a child suddenly runs onto the road. “When it comes to self-driving cars, we have to ask ourselves which tenets of behavior we are willing to relinquish to an autonomous system,” says Benzmüller. “We have to establish guidelines that tell the machine what decision to make in a given situation. That is something completely new.”

“Our infrastructure is not digital enough”

Researchers at the DCMLR have shown that self-driving cars are no longer pure science fiction. Two such cars were developed at Freie Universität, one of them an electric car. Since 2011, one of the cars has been navigating Berlin traffic on its own. The cars have lasers on their roofs and sides that help guide them. Daniel Göhring, head of the “Autonomous Cars” group, is currently looking into how to keep maps as up to date as possible. These maps, he explains, show not just streets but also construction sites, potholes, available parking spots, and trees.

“But the problem is that the infrastructure is not digital enough,” Göhring says. “In the future, it will be taken for granted that cars and traffic lights can communicate with each other.” In fact, some intersections in Berlin are already testing this. At so-called “Road-Side Units,” vehicles can deposit information they collected during their drive about obstructions or parking spots in the area. The information from the computer-driven cars would then be combined, the maps updated with the new data, and the changes sent back to the cars.

When autonomous cars will become the standard depends on how quickly this kind of communication infrastructure is developed. According to Göhring, it is still going to take some time. But he does have a forecast: in twenty years, the first self-driving cars will be on Berlin’s streets.

This article was originally published in German on June 20, 2019, in the campus.leben online magazine.